2. Installation

This chapter will describe how to get, compile and run the software.

ESPResSo releases are available as source code packages from the homepage [1]. This is where new users should get the code. The code within release packages is tested and known to run on a number of platforms. Alternatively, people who want to use the newest features of ESPResSo or start contributing to the software can obtain the current development code via the version control system software [2] from ESPResSo’s project page at GitHub [3]. This code might not be as well tested and documented as the release code; it is recommended to use this code only if you have already gained some experience in using ESPResSo.

Unlike most other software, no binary distributions of ESPResSo are available, and the software is usually not installed globally for all users. Instead, users should compile the software themselves. The reason for this is that it is possible to activate and deactivate various features before compiling the code. Some of these features are not compatible with each other, and some of the features have a profound impact on the performance of the code. Therefore it is not possible to build a single binary that can satisfy all needs. For performance reasons a user should always activate only those features that are actually needed. This means, however, that learning how to compile is a necessary evil. The build system of ESPResSo uses CMake to compile software easily on a wide range of platforms.

Users who only need a “default” installation of ESPResSo and have a GitHub account can build the software automatically in the cloud and go directly to section Using Codespaces.

2.1. Quickstart

Installing ESPResSo usually involves the following steps:

- downloading the source code: one of the stable releases (.zip files, or .tar.gz files before 2025) or the development version (git clone -b python https://github.com/espressomd/espresso.git)
- installing dependencies on Ubuntu, macOS, Windows, or other Linux distributions
- building the application: default build (Quick installation) or custom build (requires Configuring)

A troubleshooting guide is available on the project GitHub wiki, featuring contributed patches for compiler-related and library-related issues.

2.2. Requirements

The following tools and libraries, including their header files, are required to be able to compile and use ESPResSo:

- CMake

The build system is based on CMake version 3 or later [4].

- C++ compiler

The C++ core of ESPResSo needs to be built by a C++20-capable compiler. The build system will identify the compiler toolchain version and warn if it is unsupported.

When using Clang-based compiler toolchains with the GCC C++ library, extra compiler and linker flags may be required for the compiler toolchain to select a supported libstdc++ version. On HPC clusters where the main compiler toolchain picks up the operating system’s default GCC version, the issue is sometimes resolved by simply module loading both the main compiler toolchain and a recent GCC compiler toolchain.

- Boost

A number of advanced C++ features used by ESPResSo are provided by Boost. The Boost.MPI component is required. On HPC clusters where Boost is packaged without Boost.MPI, one has to build Boost from sources.

- FFTW

For some algorithms like P3M, ESPResSo needs the FFTW library version 3 or later [5] for Fourier transforms, including header files. ESPResSo leverages heFFTe [Ayala et al., 2020].

- CUDA

For some algorithms like P3M and lattice-Boltzmann, ESPResSo provides GPU-accelerated implementations for NVIDIA GPUs. CUDA 12.0 or later [6] is required.

- MPI

An MPI library that implements the MPI standard version 1.2 is required to run simulations in parallel. ESPResSo is currently tested against Open MPI and MPICH, with and without UCX enabled. Other MPI implementations like Intel MPI should also work, although they are not actively tested in ESPResSo continuous integration.

Open MPI version 4.x is known not to properly support the MCA binding policy “numa” in singleton mode on a few NUMA architectures. On affected systems, e.g. AMD Ryzen or AMD EPYC, Open MPI halts with a fatal error when setting the processor affinity in MPI_Init. This issue can be resolved by setting the environment variable OMPI_MCA_hwloc_base_binding_policy to a value other than “numa”, such as “l3cache” to bind to a NUMA shared memory block, or “none” to disable binding (which can cause a performance loss).

- OpenMP

A compiler toolchain that implements the OpenMP standard version 5.0 is required to run simulations with shared-memory parallelization. ESPResSo leverages Kokkos [Trott et al., 2022] and Cabana [Slattery et al., 2022].

- Python

ESPResSo’s main user interface relies on Python 3.

We strongly recommend using Python environments to isolate packages required by ESPResSo from packages installed system-wide. This can be achieved using venv [7], conda [8], uv [9], or any similar tool. Inside an environment, commands of the form

sudo apt install python3-numpy python3-scipy

can be rewritten as python3 -m pip install numpy scipy, and thus do not require root privileges. Whenever this documentation refers to requirements.txt, it is the one located in the top-level directory of the project.

Depending on your needs, you may choose to install all ESPResSo dependencies inside the environment, or only the subset of dependencies not already satisfied by your workstation or cluster. For the exact syntax to create and configure an environment, please refer to the tool documentation.

- Cython

Cython is used for connecting the C++ core to Python.

Python environment tools may allow you to install a Python executable that is more recent than the system-wide Python executable. Be aware this might lead to compatibility issues if Cython accidentally picks up the system-wide Python.h header file. In that scenario, you will have to manually adapt the C++ compiler include paths to find the correct Python.h header file.

2.2.1. Installing requirements on Ubuntu

To compile ESPResSo on Ubuntu 24.04 LTS, install the following build dependencies:

sudo apt install build-essential cmake cmake-curses-gui python3-dev openmpi-bin \
    libboost-all-dev libfftw3-dev libfftw3-mpi-dev libhdf5-dev libhdf5-openmpi-dev \
    python3-pip libgsl-dev freeglut3-dev

To run ESPResSo, install the following Python dependencies:

python3 -m venv espresso_env # here other virtual environment tools are also ok
. espresso_env/bin/activate
python3 -m pip install -c requirements.txt \
    cmake cython numpy scipy packaging setuptools h5py
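The environment setup above can also be scripted. The following is a minimal Python sketch that relies only on the standard-library venv module; the helper names create_env, pip_install_command and ESPRESSO_PACKAGES are hypothetical and not part of ESPResSo. It skips bootstrapping pip to stay fast and offline, and it only builds the pip command line rather than running it.

```python
import sys
import venv
from pathlib import Path


def create_env(env_dir: Path, with_pip: bool = False) -> Path:
    """Create a virtual environment in env_dir and return its Python executable.

    with_pip=False skips bootstrapping pip, which keeps this sketch fast and
    offline; `python3 -m venv` installs pip by default.
    """
    venv.create(env_dir, with_pip=with_pip)
    if sys.platform == "win32":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def pip_install_command(python: Path, packages: list[str],
                        constraints: str = "requirements.txt") -> list[str]:
    """Build the argv for `python -m pip install -c <constraints> <packages...>`."""
    return [str(python), "-m", "pip", "install", "-c", constraints, *packages]


# Python packages listed in the Ubuntu instructions above
ESPRESSO_PACKAGES = ["cmake", "cython", "numpy", "scipy", "packaging", "setuptools", "h5py"]
```

To actually install the packages, call create_env with with_pip=True and pass the returned list to subprocess.run(cmd, check=True) from the top-level source directory, so that requirements.txt is found.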

These are the only hard requirements. In the following subsections, only optional dependencies will be discussed. Unless you need extra features, you can jump directly to the next section Quick installation.

2.2.1.1. Nvidia GPU acceleration

If your computer has an Nvidia graphics card and you would like to leverage ESPResSo’s GPU algorithms, you should also download and install the CUDA SDK:

sudo apt install nvidia-cuda-toolkit

If you cannot install this package, for example because you are maintaining multiple CUDA versions, you will need to configure the binary and library paths before building the project, for example via environment variables:

export CUDA_TOOLKIT_ROOT_DIR="/usr/local/cuda-12.8"

export PATH="${CUDA_TOOLKIT_ROOT_DIR}/bin${PATH:+:$PATH}"

export LD_LIBRARY_PATH="${CUDA_TOOLKIT_ROOT_DIR}/lib64${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"

or alternatively via CMake-specific environment variables (e.g. ENV{CUDACXX}, ENV{CUDAARCHS}, ENV{CUDAHOSTCXX}) or options (e.g. -D CUDAToolkit_ROOT, -D CMAKE_CUDA_HOST_COMPILER).
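The ${VAR:+:$VAR} expansion in the exports above appends a colon and the old value only when the variable is already set and non-empty, which avoids a dangling empty entry (an empty entry in PATH is interpreted as the current directory). The following Python sketch reproduces that logic for scripts that prepare a build environment; the function names are hypothetical, and the CUDA path is the example value used above.

```python
from typing import Mapping, Optional


def prepend_path(new_entry: str, current: Optional[str]) -> str:
    """Python equivalent of the shell expression "${new_entry}${VAR:+:$VAR}".

    The old value, preceded by ':', is appended only if it is set and
    non-empty, so no empty search-path entry is created.
    """
    return f"{new_entry}:{current}" if current else new_entry


def cuda_environment(cuda_root: str, base_env: Mapping[str, str]) -> dict[str, str]:
    """Return a copy of base_env with the CUDA toolkit first on PATH and LD_LIBRARY_PATH."""
    env = dict(base_env)
    env["CUDA_TOOLKIT_ROOT_DIR"] = cuda_root
    env["PATH"] = prepend_path(f"{cuda_root}/bin", base_env.get("PATH"))
    env["LD_LIBRARY_PATH"] = prepend_path(f"{cuda_root}/lib64", base_env.get("LD_LIBRARY_PATH"))
    return env
```

For example, cuda_environment("/usr/local/cuda-12.8", os.environ) can be passed as the env argument of subprocess.run when invoking cmake.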

Later in the installation instructions, you will see CMake commands of the form cmake .. with optional CUDA-related options and environment variables, such as CUDACXX=/usr/lib/nvidia-cuda-toolkit/bin/nvcc cmake .. -D ESPRESSO_BUILD_WITH_CUDA=ON to activate CUDA. These commands may need to be adapted depending on which operating system and CUDA version you are using.

You can control the list of CUDA architectures to generate device code for. For example, CUDAARCHS="75;86" cmake .. -D ESPRESSO_BUILD_WITH_CUDA=ON will generate device code for both sm_75 and sm_86 architectures. The CMake option ESPRESSO_CMAKE_CUDA_ARCHITECTURES achieves the same effect. Both take a semicolon-separated list of integers. There are online resources to help determine which architectures match specific hardware [12].

The CMake option CMAKE_CUDA_ARCHITECTURES cannot be used to set CUDA architectures, because its default value is too old for the minimally required CUDA version.
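The sm_XY architecture names encode the compute capability X.Y of the GPU (sm_75 corresponds to compute capability 7.5), so a list of compute capabilities can be converted mechanically into the semicolon-separated CUDAARCHS value. A minimal sketch, assuming the compute capabilities are already known as strings such as "7.5"; the helper name is hypothetical.

```python
from typing import Iterable


def cuda_archs(compute_capabilities: Iterable[str]) -> str:
    """Turn compute capabilities like "7.5" into a CUDAARCHS value like "75".

    Duplicates are dropped and the result is sorted numerically.
    Suffixed architectures such as sm_90a are not handled by this sketch.
    """
    archs = set()
    for cc in compute_capabilities:
        major, minor = cc.strip().split(".")
        archs.add(int(major) * 10 + int(minor))  # "8.6" -> 86, "10.0" -> 100
    return ";".join(str(arch) for arch in sorted(archs))
```

The result can be used as the CUDAARCHS environment variable or as the value of the ESPRESSO_CMAKE_CUDA_ARCHITECTURES option.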

On Ubuntu 24.04, the default GCC compiler may be too recent for nvcc 12.0. You can either use GCC 12 or alternatively install Clang 19 as a replacement for nvcc and GCC. If the NVIDIA HPC SDK is installed, the NVHPC toolchain can be used with GCC 12.

With GCC 12:

CC=gcc-12 CXX=g++-12 CUDACXX=/usr/lib/nvidia-cuda-toolkit/bin/nvcc cmake .. \
    -D ESPRESSO_BUILD_WITH_CUDA=ON \
    -D CUDAToolkit_ROOT=/usr/lib/nvidia-cuda-toolkit \
    -D CMAKE_CUDA_HOST_COMPILER=g++-12

with compiler dependencies:

sudo apt install gcc-12 g++-12 libstdc++-12-dev

With Clang 19:

CC=clang-19 CXX=clang++-19 CUDACXX=clang++-19 cmake .. \
    -D ESPRESSO_BUILD_WITH_CUDA=ON \
    -D CUDAToolkit_ROOT=/usr/local/cuda-12.0 \
    -D CMAKE_CXX_FLAGS="-I/usr/include/x86_64-linux-gnu/c++/12 -I/usr/include/c++/12" \
    -D CMAKE_CUDA_FLAGS="-I/usr/include/x86_64-linux-gnu/c++/12 -I/usr/include/c++/12 --cuda-path=/usr/local/cuda-12.0"

with compiler dependencies:

sudo apt install \
    clang-19 clang-tidy-19 clang-format-19 llvm-19 libc++-19-dev \
    libclang-rt-19-dev libomp-19-dev gcc-12 g++-12 libstdc++-12-dev

With NVHPC (and GCC 12):

CC=nvc CXX=nvc++ CUDACXX=nvcc cmake .. \
    -D ESPRESSO_BUILD_WITH_CUDA=ON \
    -D CMAKE_CXX_FLAGS="--gcc-toolchain=gcc-12"

with compiler dependencies:

sudo apt install gcc-12 g++-12 libstdc++-12-dev

To print which toolchains and libraries are loaded by NVHPC, run:

echo 'int main() {}' > mwe.cpp

nvc++ -v -std=c++20 mwe.cpp

Please note that all CMake options and compiler flags that involve /usr/local/cuda-* need to be adapted to your CUDA environment. They are only necessary on systems with multiple CUDA releases installed, and can be safely removed if you have only one CUDA release installed.

Please also note that with Clang, you still need the GCC 12 toolchain. The extra compiler flags in the Clang CMake command above are needed to pin the search paths of Clang. By default, it searches through the most recent GCC version, which is GCC 13 on Ubuntu 24.04. It is not possible to install the NVIDIA driver without GCC 13 due to a dependency resolution issue (nvidia-dkms depends on dkms, which depends on gcc-13).

On Ubuntu 26.04, only GCC <= 13 can be used with nvcc 12.4. Alternatively, the Clang toolchain can be used. If the NVIDIA HPC SDK is installed, the NVHPC toolchain can be used with GCC 13.

With GCC (GCC 15 for the C++ code, GCC 13 as the CUDA host compiler):

CC=gcc-15 CXX=g++-15 CUDAHOSTCXX=g++-13 CUDACXX=/usr/lib/nvidia-cuda-toolkit/bin/nvcc \
    CMAKE_CXX_IMPLICIT_LINK_DIRECTORIES_EXCLUDE=/usr/lib/gcc/x86_64-linux-gnu/15 cmake .. \
    -D ESPRESSO_BUILD_WITH_CUDA=ON \
    -D CUDAToolkit_ROOT=/usr/lib/nvidia-cuda-toolkit

with compiler dependencies:

sudo apt install \
    gcc-13 g++-13 libstdc++-13-dev \
    gcc-15 g++-15 libstdc++-15-dev

With Clang 20:

CC=clang-20 CXX=clang++-20 CUDACXX=clang++-20 cmake .. \
    -D ESPRESSO_BUILD_WITH_CUDA=ON \
    -D CUDAToolkit_ROOT=/usr/lib/cuda \
    -D CMAKE_CXX_FLAGS="-I/usr/include/x86_64-linux-gnu/c++/15 -I/usr/include/c++/15" \
    -D CMAKE_CUDA_FLAGS="-I/usr/include/x86_64-linux-gnu/c++/15 -I/usr/include/c++/15 --cuda-path=/usr/lib/cuda"

with compiler dependencies:

sudo apt install \
    clang-20 clang-tidy-20 clang-format-20 llvm-20 libc++-20-dev \
    libclang-rt-20-dev libomp-20-dev gcc-15 g++-15 libstdc++-15-dev

With NVHPC (and GCC 13):

CC=nvc CXX=nvc++ CUDACXX=nvcc cmake .. \
    -D ESPRESSO_BUILD_WITH_CUDA=ON \
    -D CMAKE_CXX_FLAGS="--gcc-toolchain=gcc-13"

with compiler dependencies:

sudo apt install gcc-13 g++-13 libstdc++-13-dev

To print which toolchains and libraries are loaded by NVHPC, run:

echo 'int main() {}' > mwe.cpp

nvc++ -v -std=c++20 mwe.cpp

2.2.1.2. Setting up a Jupyter environment

To run the samples and tutorials, start by installing the following packages:

sudo apt install ffmpeg

python3 -m pip install -c requirements.txt matplotlib pint tqdm

The tutorials are written in the Notebook Format [Kluyver et al., 2016] version 4.5 and can be executed by any of these tools:

- JupyterLab
- Jupyter Notebook
- VS Code with the Jupyter extension

To check whether one of them is installed, run these commands:

jupyter lab --version

jupyter notebook --version

code --version
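The same check can be done from Python, which is convenient when a setup script has to decide which tool to install. This sketch only relies on shutil.which and subprocess.run from the standard library; the tool table mirrors the three commands above, and the names NOTEBOOK_TOOLS, tool_version and detect_notebook_tools are hypothetical.

```python
import shutil
import subprocess
from typing import Optional

# The three checks from the commands above: display name -> version command
NOTEBOOK_TOOLS = {
    "JupyterLab": ["jupyter", "lab", "--version"],
    "Jupyter Notebook": ["jupyter", "notebook", "--version"],
    "VS Code": ["code", "--version"],
}


def tool_version(argv: list[str]) -> Optional[str]:
    """Return the first line printed by argv, or None if the tool is unavailable."""
    if shutil.which(argv[0]) is None:
        return None  # executable not found on PATH
    try:
        result = subprocess.run(argv, capture_output=True, text=True, timeout=60)
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None  # e.g. `jupyter` exists but the `lab` subcommand does not
    lines = result.stdout.strip().splitlines()
    return lines[0] if lines else ""


def detect_notebook_tools() -> dict[str, Optional[str]]:
    """Map each tool name to its reported version, or None if it is missing."""
    return {name: tool_version(argv) for name, argv in NOTEBOOK_TOOLS.items()}
```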

If you don’t have any of these tools installed and aren’t sure which one to use, we recommend installing JupyterLab:

python3 -m pip install -c requirements.txt \
    "jupyterlab>=4.3" nbformat nbconvert "lxml[html_clean]" jupyter_console

If you prefer the look and feel of Jupyter Classic, install the following extra package:

python3 -m pip install -c requirements.txt nbclassic

Alternatively, to use VS Code Jupyter, install the following extensions:

code --install-extension ms-python.python

code --install-extension ms-toolsai.jupyter

code --install-extension ms-toolsai.jupyter-keymap

code --install-extension ms-toolsai.jupyter-renderers

2.2.1.3. Requirements for visualizers

To install the OpenGL visualizer:

python3 -m pip install -c requirements.txt PyOpenGL

To install the ZnDraw visualizer:

python3 -m pip install -c requirements.txt zndraw

2.2.1.4. Requirements for building the documentation

To generate the Sphinx documentation, install the following packages:

python3 -m pip install -c requirements.txt \
    sphinx sphinxcontrib-bibtex sphinx-toggleprompt sphinx-tabs

To generate the Doxygen documentation, install the following packages:

sudo apt install doxygen graphviz

2.2.2. Installing requirements on other Linux distributions

Please refer to the following Dockerfiles to find the minimum set of packages required to compile ESPResSo on other Linux distributions:

2.2.3. Installing requirements on Windows via WSL

To run ESPResSo on Windows, use the Windows Subsystem for Linux (WSL). For that, you need to:

- follow these instructions to install Ubuntu
- start Ubuntu (or open an Ubuntu tab in Windows Terminal)
- execute sudo apt update to prepare the installation of dependencies
- optional step: if you have an NVIDIA graphics card available and want to make use of ESPResSo’s GPU acceleration, follow these instructions to set up CUDA
- follow the instructions for Installing requirements on Ubuntu

Note on file system performance: when using WSL, avoid cloning or building the repository on the mounted Windows file system (e.g., /mnt/c/Users/...). This causes severe I/O performance degradation. Always clone and build within the Linux file system (e.g., /home/user/espresso).
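A build script can guard against this pitfall. The sketch below assumes WSL’s default automount location, where Windows drives appear as /mnt/<drive letter>; if the automount root was changed in the WSL configuration, the pattern must be adapted. It expects an absolute path (e.g. from os.path.abspath), and the function name is hypothetical.

```python
import re
from pathlib import PurePosixPath

# Default WSL automount layout: Windows drive C: is visible as /mnt/c
_WINDOWS_DRIVE = re.compile(r"^/mnt/[A-Za-z](?:/|$)")


def is_on_windows_mount(path: str) -> bool:
    """Return True if the absolute path lies on a Windows drive mounted by WSL."""
    normalized = str(PurePosixPath(path))  # collapses redundant and trailing slashes
    return _WINDOWS_DRIVE.match(normalized) is not None
```

A script could, for instance, print a warning and abort when is_on_windows_mount(os.path.abspath(".")) is true before starting the build.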

2.2.4. Installing requirements on macOS

The first step is to install a C++ compiler, such as Xcode [10]. Xcode is missing OpenMP, which is needed to enable shared-memory parallelization, but binaries are available from Homebrew (formula libomp) or from the “R for macOS Developers” project [11].

To install libraries, a package manager will be needed. While our instructions below are specific to Homebrew, they should be fairly easy to adapt for other package managers. If Homebrew isn’t available, it can be installed with these instructions, but bear in mind that it might conflict with other installed managers, such as MacPorts.

If Homebrew is already installed, resolve any problems reported by the command:

brew doctor

Install the following libraries:

brew install boost boost-mpi fftw gsl freeglut hdf5-mpi

For the last step, we will use the uv utility (installation instructions) to install the Python interpreter and all required Python packages in a virtual environment:

uv venv --python 3.13

. .venv/bin/activate

uv pip install -c requirements.txt \
    cmake cython numpy scipy matplotlib tqdm packaging setuptools h5py \
    PyOpenGL "jupyterlab>=4.3" nbformat nbconvert "lxml[html_clean]"

2.3. Quick installation

If you have installed the requirements (see section Requirements) in standard locations, compiling ESPResSo is usually only a matter of creating a build directory and calling cmake and make in it. See for example the command lines below (optional steps which modify the build process are commented out):

mkdir build
cd build
cmake ..
# ccmake .    # in order to add/remove features like ScaFaCoS or CUDA
make -j$(nproc)

This will build ESPResSo with a default feature set, namely src/config/myconfig-default.hpp. This file is a C++ header file, which defines the features that should be compiled in.

You may want to adjust the feature set to your needs. This can be easily done by copying the myconfig-sample.hpp, which has been created in the build directory, to myconfig.hpp and uncommenting only the features you want to use in your simulation.
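The copy-and-uncomment step can be automated. This is a sketch under the assumption that myconfig-sample.hpp lists each feature on its own line as //#define FEATURE or // #define FEATURE (a trailing comment on such a line is dropped); check the generated sample before relying on it. The helper names enable_features and write_myconfig are hypothetical.

```python
import re
from pathlib import Path

# Assumed sample layout: one feature per line, commented out as
# "//#define FEATURE" or "// #define FEATURE" (or already active).
_FEATURE_LINE = re.compile(r"^\s*(//\s*)?#define\s+(\w+)\b")


def enable_features(sample_text: str, wanted: set[str]) -> str:
    """Return the sample text with the #define lines of `wanted` uncommented.

    Other lines are copied unchanged. A ValueError is raised for requested
    features that do not appear in the sample, which catches typos early.
    """
    found: set[str] = set()
    out: list[str] = []
    for line in sample_text.splitlines():
        match = _FEATURE_LINE.match(line)
        if match and match.group(2) in wanted:
            out.append(f"#define {match.group(2)}")
            found.add(match.group(2))
        else:
            out.append(line)
    missing = wanted - found
    if missing:
        raise ValueError(f"features not found in sample: {sorted(missing)}")
    return "\n".join(out) + "\n"


def write_myconfig(build_dir: Path, wanted: set[str]) -> Path:
    """Create build_dir/myconfig.hpp from build_dir/myconfig-sample.hpp."""
    sample = (build_dir / "myconfig-sample.hpp").read_text()
    target = build_dir / "myconfig.hpp"
    target.write_text(enable_features(sample, wanted))
    return target
```

For example, write_myconfig(Path("build"), {"LENNARD_JONES", "ROTATION"}) writes build/myconfig.hpp; cmake has to be run again afterwards so that the new header is picked up.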

The cmake command looks for libraries and tools needed by ESPResSo. The application can only be built if CMake reports no errors.

The command make will compile the source code. Depending on the options passed to the program, make can also be used for a number of other things:

- It can install and uninstall the program to some other directories. However, it is normally not necessary to install the program in order to run it:
  make install
- It can invoke code checks:
  make check
- It can build this documentation:
  make sphinx

When these steps have successfully completed, ESPResSo can be started with the command:

./pypresso script.py

where script.py is a Python script which has to be written by the user. You can find some examples in the samples folder of the source code directory. If you want to run in parallel, ESPResSo must have been compiled with an MPI library, and you need to tell MPI to run in parallel. The actual invocation is implementation-dependent, but in many cases, such as Open MPI and MPICH, you can use

mpirun -n 4 ./pypresso script.py

where 4 is the number of CPU cores to be used.
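When simulations are launched from a Python driver script, the command line and environment can be assembled as follows. This is a hedged sketch: the function names are hypothetical, os.cpu_count() reports logical CPUs (which may exceed the number of physical cores), and the environment helper applies the Open MPI 4.x binding-policy workaround from the MPI requirements only when the policy is unset or set to “numa”.

```python
import os
from typing import Mapping, Optional

BINDING_VAR = "OMPI_MCA_hwloc_base_binding_policy"


def mpirun_command(script: str, nproc: Optional[int] = None,
                   launcher: str = "mpirun") -> list[str]:
    """Build `mpirun -n <nproc> ./pypresso <script>`.

    Without nproc, os.cpu_count() is used; it counts logical CPUs, which can
    be more than the number of physical cores.
    """
    n = nproc if nproc is not None else (os.cpu_count() or 1)
    return [launcher, "-n", str(n), "./pypresso", script]


def openmpi_singleton_env(base_env: Mapping[str, str],
                          policy: str = "l3cache") -> dict[str, str]:
    """Copy base_env and avoid the Open MPI 4.x "numa" binding issue.

    The variable is only set when it is unset or equal to "numa", so an
    explicit user choice such as "none" is preserved.
    """
    env = dict(base_env)
    if env.get(BINDING_VAR, "numa") == "numa":
        env[BINDING_VAR] = policy
    return env
```

From the build directory, a parallel run would then be subprocess.run(mpirun_command("script.py", nproc=4), check=True), and a singleton run subprocess.run(["./pypresso", "script.py"], env=openmpi_singleton_env(os.environ), check=True).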

2.4. Features

This chapter describes the features that can be activated in ESPResSo. Although it is possible to activate all features, it is not recommended, because doing so negatively affects ESPResSo’s performance.

Most features can be activated in the configuration header myconfig.hpp (see section myconfig.hpp: Activating and deactivating features).

To activate FEATURE, add the following line to the header file:

#define FEATURE

Some features cannot be manually enabled; instead, they are automatically enabled when all the features they depend on are enabled. For example, MMM1D is automatically enabled when ELECTROSTATICS and GSL are enabled. Please note that GSL is an external feature and can only be enabled via a CMake option (see External features).
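The dependency rule for derived features can be modeled as a small fixed-point computation. The sketch below only encodes the MMM1D rule stated above and illustrates the logic; it is not taken from ESPResSo’s sources, and the table and function names are hypothetical.

```python
# Illustrative rule table: derived feature -> features it requires.
# Only the MMM1D rule comes from the text above.
DERIVED_FEATURES = {
    "MMM1D": {"ELECTROSTATICS", "GSL"},
}


def resolve_features(enabled: set[str]) -> set[str]:
    """Add every derived feature whose requirements are all enabled.

    Loops until nothing changes, so a derived feature may itself be a
    requirement of another derived feature.
    """
    result = set(enabled)
    changed = True
    while changed:
        changed = False
        for derived, requires in DERIVED_FEATURES.items():
            if derived not in result and requires <= result:
                result.add(derived)
                changed = True
    return result
```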

2.4.1. General features

- ELECTROSTATICS: This enables the use of the various electrostatics algorithms, such as P3M.
- DIPOLES: This activates the dipole-moment property of particles and switches on various magnetostatics algorithms.
- SCAFACOS_DIPOLES: This activates magnetostatics methods of ScaFaCoS.
- DIPOLE_FIELD_TRACKING: Enables dipolar direct sum algorithms to calculate the total dipole field at particle positions.
- THERMAL_STONER_WOHLFARTH: Enables dipolar algorithms to integrate virtual sites that implement the thermal Stoner–Wohlfarth model.
- ROTATION: Switches on rotational degrees of freedom for the particles, as well as the corresponding quaternion integrator.

Note

When ROTATION is activated, every particle has three additional degrees of freedom, which, for example, means that the kinetic energy at constant temperature is twice as large.

- THERMOSTAT_PER_PARTICLE: Allows setting a per-particle friction coefficient for the Langevin and Brownian thermostats.
- ROTATIONAL_INERTIA: Allows particles to have individual rotational inertia matrix eigenvalues. When not built in, all eigenvalues are unity in simulation units.
- EXTERNAL_FORCES: Allows defining an arbitrary constant force for each particle individually. Also allows fixing individual coordinates of particles, keeping them at a fixed position or within a plane.
- MASS: Allows particles to have individual masses. When not built in, all masses are unity in simulation units.
- EXCLUSIONS: Allows particle pairs to be excluded from non-bonded interaction calculations.
- BOND_CONSTRAINT: Turns on the RATTLE integrator, which allows for fixed-length bonds between particles.
- VIRTUAL_SITES: Allows the creation of pseudo-particles whose forces, torques, and orientations can be transferred to real particles. They don’t have mass, and their position is generally fixed in the simulation box or fixed to other particles.
- VIRTUAL_SITES_INERTIALESS_TRACERS: Allows using virtual sites as tracers by advecting them with an LB fluid.
- VIRTUAL_SITES_RELATIVE: Virtual sites are particles whose position and velocity are not obtained by integrating equations of motion. Rather, they are placed using the position (and orientation) of other particles. The feature allows for rigid arrangements of particles.
- COLLISION_DETECTION: Allows particles to be bound on collision.
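The factor of two in the ROTATION note follows from the equipartition theorem: assuming every degree of freedom is quadratic in the energy and thermalized, each one carries k_B T / 2 on average, so a particle with f degrees of freedom has the mean kinetic energy below, and going from 3 translational to 3 translational plus 3 rotational degrees of freedom doubles it.

```latex
\langle E_\mathrm{kin} \rangle = \frac{f}{2} k_B T,
\qquad
\frac{\langle E_\mathrm{kin}^\mathrm{trans} + E_\mathrm{kin}^\mathrm{rot} \rangle}
     {\langle E_\mathrm{kin}^\mathrm{trans} \rangle}
= \frac{\frac{3 + 3}{2} k_B T}{\frac{3}{2} k_B T} = 2
```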

In addition, there are switches that enable additional features in the integrator or thermostat:

- NPT: Enables the NpT integration scheme.
- ENGINE: Activates swimming parameters for active particles (self-propelled particles).
- PARTICLE_ANISOTROPY: Allows the use of non-isotropic friction coefficients in thermostats.

2.4.2. Fluid dynamics and fluid structure interaction

- DPD: Enables the dissipative particle dynamics thermostat and interaction.
- LB_ELECTROHYDRODYNAMICS: Enables the implicit calculation of electro-hydrodynamics for charged particles and salt ions in an electric field.

2.4.3. Interaction features

The following switches turn on various short ranged interactions (see section Isotropic non-bonded interactions):

- TABULATED: Enable support for user-defined non-bonded interaction potentials.
- LENNARD_JONES: Enable the Lennard-Jones potential.
- LENNARD_JONES_GENERIC: Enable the generic Lennard-Jones potential with configurable exponents and individual prefactors for the two terms.
- LJCOS: Enable the Lennard-Jones potential with a cosine-tail.
- LJCOS2: Same as LJCOS, but using a slightly different way of smoothing the connection to 0.
- WCA: Enable the Weeks–Chandler–Andersen potential.
- GAY_BERNE: Enable the Gay–Berne potential.
- HERTZIAN: Enable the Hertzian potential.
- MORSE: Enable the Morse potential.
- BUCKINGHAM: Enable the Buckingham potential.
- SOFT_SPHERE: Enable the soft sphere potential.
- SMOOTH_STEP: Enable the smooth step potential, a step potential with two length scales.
- BMHTF_NACL: Enable the Born–Meyer–Huggins–Tosi–Fumi potential, which can be used to model salt melts.
- GAUSSIAN: Enable the Gaussian potential.
- HAT: Enable the Hat potential.

Some of the short-range interactions have additional features:

- LJGEN_SOFTCORE: This modifies the generic Lennard-Jones potential (LENNARD_JONES_GENERIC) with tunable parameters.
- THOLE: See Thole correction.

2.4.4. Debug messages

Finally, there is a flag for debugging:

- ADDITIONAL_CHECKS: Enables numerous additional checks which can detect inconsistencies, especially in the cell systems. These checks are, however, too slow to be enabled in production runs.

2.4.5. External features

External features cannot be added to the myconfig.hpp file by the user. They are added by CMake if the corresponding dependency was found on the system. Some of these external features are optional and must be activated using a CMake flag (see Options and Variables).

- CUDA: enables offloading to Nvidia GPUs for features that support it (see CUDA acceleration).
- FFTW: enables features relying on fast Fourier transforms, such as the P3M method (see Coulomb P3M and Dipolar P3M).
- H5MD: enables parallel input/output to HDF5 files with the H5MD specification (see Writing hdf5 files).
- WALBERLA: enables continuum-based solvers: the lattice-Boltzmann method, the diffusion-advection-reaction equations solver, and the Poisson equation solver if FFTW is enabled (see Lattice-Boltzmann and Electrokinetics).
- SCAFACOS: enables features from the ScaFaCoS library (see ScaFaCoS electrostatics, ScaFaCoS magnetostatics).
- GSL: enables features relying on the GNU Scientific Library, e.g. espressomd.cluster_analysis.Cluster.fractal_dimension() and espressomd.electrostatics.MMM1D.
- NLOPT: enables features relying on the nonlinear optimization library NLopt, e.g. Thermal Stoner–Wohlfarth.
- STOKESIAN_DYNAMICS: enables the Stokesian Dynamics propagator (see Stokesian Dynamics). Requires BLAS and LAPACK.
- SHARED_MEMORY_PARALLELISM: enables shared-memory parallelism (OpenMP, Kokkos, Cabana).
- CALIPER, VALGRIND, FPE: enable various instrumentation tools (see Instrumentation).

2.5. Configuring

2.5.1. myconfig.hpp: Activating and deactivating features

ESPResSo has a large number of features that can be compiled into the binary. However, it is not recommended to actually compile in all possible features, as this will slow down ESPResSo significantly. Instead, compile in only the features that are actually required. A strong gain in speed can be achieved by disabling all non-bonded interactions except for a single one, e.g. LENNARD_JONES. For developers, it is also possible to turn on or off a number of debugging messages. The features and debug messages can be controlled via a configuration header file that contains C-preprocessor declarations. Subsection Features describes all available features. If a file named myconfig.hpp is present in the build directory when cmake is run, all features defined in it will be compiled in. If no such file exists, the configuration file src/config/myconfig-default.hpp will be used instead, which turns on the default features.

When you distinguish between the build and the source directory, the configuration header can be put in either of these. Note, however, that when a configuration header is found in both directories, the one in the build directory will be used.

By default, the configuration header is called myconfig.hpp.

The configuration header can be used to compile different binary versions of ESPResSo with a different set of features from the same source directory. Suppose that you have a source directory $srcdir and two build directories $builddir1 and $builddir2 that contain different configuration headers:

$builddir1/myconfig.hpp:

#define ELECTROSTATICS
#define LENNARD_JONES

$builddir2/myconfig.hpp:

#define LJCOS

Then you can simply compile two different versions of ESPResSo via:

cd $builddir1
cmake $srcdir
make

cd $builddir2
cmake $srcdir
make
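The two-variant setup can also be scripted. In this sketch the feature sets are the ones from the example above, while the directory names and helper functions are hypothetical. Instead of cd followed by cmake, it uses the cmake -S <source> -B <build> form (available in CMake 3.13 and later) and make -C <dir>, which are equivalent. The commands are only returned, not executed.

```python
from pathlib import Path

# Feature sets from the example above; directory names are hypothetical.
VARIANTS = {
    "build-electrostatics-lj": ["ELECTROSTATICS", "LENNARD_JONES"],
    "build-ljcos": ["LJCOS"],
}


def make_variant(root: Path, name: str, features: list[str]) -> Path:
    """Create root/name containing a myconfig.hpp that defines `features`."""
    build_dir = root / name
    build_dir.mkdir(parents=True, exist_ok=True)
    header = "".join(f"#define {feature}\n" for feature in features)
    (build_dir / "myconfig.hpp").write_text(header)
    return build_dir


def build_commands(src_dir: Path, build_dir: Path, jobs: int = 4) -> list[list[str]]:
    """Return the configure and compile commands for one variant (not executed)."""
    return [
        ["cmake", "-S", str(src_dir), "-B", str(build_dir)],
        ["make", "-C", str(build_dir), f"-j{jobs}"],
    ]
```

Each returned command list can be passed to subprocess.run(cmd, check=True).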

To see what features were activated in myconfig.hpp, run:

./pypresso

and then in the Python interpreter:

import espressomd

print(espressomd.features())

2.5.2. cmake

To build ESPResSo, the first step is to create a build directory in which cmake can be executed. In cmake, the source directory (that contains all the source files) is completely separated from the build directory (where the files created by the build process are put). cmake is designed not to be executed in the source directory. cmake will determine how to use and where to find the compiler, as well as the different libraries and tools required by the compilation process. By having multiple build directories, you can build several variants of ESPResSo, each variant having different activated features, and for as many platforms as you want.

Once you’ve run cmake (or ccmake), you can list the configured variables with cmake -LAH -N . | less (uses a pager) or with ccmake .. and pressing key t to toggle the advanced mode on (uses the curses interface).

Example:

When the source directory is srcdir (the files were unpacked to this directory), the user can create a build directory build below that path by calling mkdir srcdir/build. In the build directory, cmake is to be executed, followed by a call to make. None of the files in the source directory are ever modified by the build process.

cd build
cmake ..
make -j$(nproc)

Afterwards ESPResSo can be run by calling ./pypresso from the command line.

2.5.3. ccmake

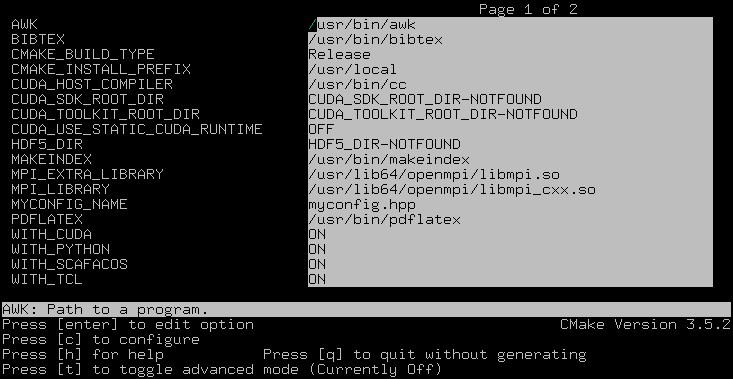

Optionally and for easier use, the curses interface to cmake can be used to configure ESPResSo interactively.

Example:

As an alternative to the previous example, the ccmake executable is called instead of cmake in the build directory to configure ESPResSo, followed by a call to make:

cd build

ccmake ..

make

Fig. ccmake interface shows the interactive ccmake UI.

ccmake interface

2.5.4. Options and Variables

The behavior of ESPResSo can be controlled by means of options and variables in the CMakeLists.txt file. Most options are boolean values (ON or OFF). A few options are strings or semicolon-delimited lists.

The following options control features from external libraries:

- ESPRESSO_BUILD_WITH_CUDA: Build with GPU support.
- ESPRESSO_BUILD_WITH_HDF5: Build with HDF5 support.
- ESPRESSO_BUILD_WITH_FFTW: Build with FFTW support.
- ESPRESSO_BUILD_WITH_SCAFACOS: Build with ScaFaCoS support.
- ESPRESSO_BUILD_WITH_GSL: Build with GSL support.
- ESPRESSO_BUILD_WITH_STOKESIAN_DYNAMICS: Build with Stokesian Dynamics support.
- ESPRESSO_BUILD_WITH_SHARED_MEMORY_PARALLELISM: Build with shared-memory parallelism support (OpenMP, Cabana, Kokkos, etc.).
- ESPRESSO_BUILD_WITH_WALBERLA: Build with waLBerla support.
- ESPRESSO_BUILD_WITH_WALBERLA_AVX: Build waLBerla with AVX kernels instead of regular kernels.
- ESPRESSO_BUILD_WITH_PYTHON: Build with the Python interface.

The following options control code instrumentation:

- ESPRESSO_BUILD_WITH_VALGRIND: Build with Valgrind instrumentation.
- ESPRESSO_BUILD_WITH_CALIPER: Build with Caliper instrumentation.
- ESPRESSO_BUILD_WITH_MSAN: Compile C++ code with memory sanitizer.
- ESPRESSO_BUILD_WITH_ASAN: Compile C++ code with address sanitizer.
- ESPRESSO_BUILD_WITH_UBSAN: Compile C++ code with undefined behavior sanitizer.
- ESPRESSO_BUILD_WITH_COVERAGE: Generate C++ code coverage reports when running ESPResSo.
- ESPRESSO_BUILD_WITH_COVERAGE_PYTHON: Generate Python code coverage reports when running ESPResSo.

The following options control how the project is built and tested:

- ESPRESSO_BUILD_WITH_CLANG_TIDY: Run Clang-Tidy during compilation.
- ESPRESSO_BUILD_WITH_CPPCHECK: Run Cppcheck during compilation.
- ESPRESSO_BUILD_WITH_CCACHE: Enable compiler cache for faster rebuilds.
- ESPRESSO_BUILD_TESTS: Enable C++ and Python tests.
- ESPRESSO_BUILD_BENCHMARKS: Enable benchmarks.
- ESPRESSO_CTEST_ARGS (string): Arguments passed to the ctest command (semicolon-separated list).
- ESPRESSO_TEST_TIMEOUT: Test timeout.
- ESPRESSO_ADD_OMPI_SINGLETON_WARNING: Add a runtime warning in the pypresso and ipypresso scripts that is triggered in singleton mode with Open MPI version 4.x on unsupported NUMA environments (see MPI installation requirements for details).
- ESPRESSO_MYCONFIG_NAME (string): Filename of the user-provided config file.
- MPIEXEC_PREFLAGS, MPIEXEC_POSTFLAGS (strings): Flags passed to the mpiexec command in MPI-parallel tests and benchmarks.
- CMAKE_BUILD_TYPE (string): Build type. Default is Release.
- CMAKE_CXX_FLAGS (string): Flags passed to the C++ compiler.
- CMAKE_CUDA_FLAGS (string): Flags passed to the CUDA compiler.
- CMAKE_CUDA_ARCHITECTURES (list): Semicolon-separated list of architectures to generate device code for.
- CUDAToolkit_ROOT (string): Path to the CUDA toolkit directory.

Most of these options are opt-in, meaning their default value is set to OFF in the CMakeLists.txt file. These options can be modified by calling cmake with the command line argument -D:

cmake -D ESPRESSO_BUILD_WITH_HDF5=OFF ..

When an option is enabled, additional options may become available. For example, with -D ESPRESSO_BUILD_TESTS=ON, one can specify the CTest parameters with -D ESPRESSO_CTEST_ARGS=-j$(nproc).

Environment variables can be passed to CMake. For example, to select the Clang compiler and specify which GPU architectures to generate device code for, use

CC=clang CXX=clang++ CUDACXX=clang++ CUDAARCHS="61;75" cmake .. -D ESPRESSO_BUILD_WITH_CUDA=ON

When multiple versions of the CUDA library are available, the correct one can be selected with

CUDA_BIN_PATH=/usr/local/cuda-12.0 cmake .. -D ESPRESSO_BUILD_WITH_CUDA=ON

With Clang as the CUDA compiler, it is also necessary to override its default CUDA path with -D CMAKE_CUDA_FLAGS=--cuda-path=/usr/local/cuda-12.0.

2.5.4.1. Build types and compiler flags

The build type is controlled by -D CMAKE_BUILD_TYPE=<type>, where <type> can take one of the following values:

Release: for production use; disables assertions and debug information and enables -O3 optimization (this is the default).
RelWithAssert: for debugging; enables assertions and -O3 optimization (use this to track down the source of a fatal error).
Debug: for debugging in GDB.
Coverage: for code coverage.

Cluster users and HPC developers may be interested in manually editing the espresso_cpp_flags target in the top-level CMakeLists.txt file for finer control over compiler flags. The target declaration is followed by a series of conditionals that enable or disable compiler-specific flags.

Compiler flags passed to CMake via the -D CMAKE_CXX_FLAGS option (such as cmake . -D CMAKE_CXX_FLAGS="-ffast-math -fno-finite-math-only") will appear in the compiler command before the flags in espresso_cpp_flags and will therefore have lower precedence: when two options conflict, GCC and Clang generally apply the one that appears last.

Be aware that fast-math mode can break ESPResSo. It is incompatible with the ADDITIONAL_CHECKS feature due to the loss of precision in the LB code on CPU. The Clang 10 compiler breaks field couplings with -ffast-math. The Intel compiler enables the -fp-model fast=1 flag by default; it can be disabled by adding the -fp-model=strict flag.

ESPResSo currently doesn’t fully support link-time optimization (LTO).

2.5.5. Configuring without a network connection

Several features in ESPResSo rely on external libraries that are downloaded automatically by CMake. When a network connection cannot be established, for example due to firewall restrictions, the CMake logic needs to be edited.

External libraries are downloaded and included into the CMake project using FetchContent. The repository URLs can be found in the GIT_REPOSITORY field of the corresponding FetchContent_Declare() commands, and the GIT_TAG field provides the commit. Clone these repositories locally and edit the ESPResSo build system such that GIT_REPOSITORY points to the absolute path of the clone. You can automate this text substitution by adapting the following command:

sed -ri 's|GIT_REPOSITORY +.+/([^/]+)\.git|GIT_REPOSITORY /work/username/\1|' CMakeLists.txt
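The offline workflow can be rehearsed on a throwaway file before touching the real build system. The sketch below assumes bash, GNU sed (for sed -ri) and awk; the sample FetchContent_Declare() entry, the example_lib name and the /work/username path are hypothetical placeholders, not actual ESPResSo dependencies. It first prints the git commands that fetch each repository at its GIT_TAG (to be run wherever network access is available), then applies the same substitution as the command above and shows the result:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Throwaway copy of a CMakeLists.txt fragment (hypothetical dependency)
workdir=$(mktemp -d)
trap 'rm -rf "${workdir}"' EXIT
cat > "${workdir}/CMakeLists.txt" <<'EOF'
FetchContent_Declare(
  example_lib
  GIT_REPOSITORY https://github.com/example/example_lib.git
  GIT_TAG v1.2.3
)
EOF

# Emit one clone command per GIT_REPOSITORY/GIT_TAG pair, assuming GIT_TAG
# follows GIT_REPOSITORY inside each FetchContent_Declare() call; checking out
# the tag also confirms that the clone contains the pinned commit
clone_root=/work/username
clone_cmds=$(awk -v root="${clone_root}" '
  $1 == "GIT_REPOSITORY" { url = $2; name = url; sub(/.*\//, "", name); sub(/\.git$/, "", name) }
  $1 == "GIT_TAG"        { printf "git clone %s %s/%s && git -C %s/%s checkout %s\n", url, root, name, root, name, $2 }
' "${workdir}/CMakeLists.txt")
printf '%s\n' "${clone_cmds}"

# Point GIT_REPOSITORY at the local clones
sed -ri "s|GIT_REPOSITORY +.+/([^/]+)\.git|GIT_REPOSITORY ${clone_root}/\1|" "${workdir}/CMakeLists.txt"
grep GIT_REPOSITORY "${workdir}/CMakeLists.txt"
```

Once the output looks right, the same sed call can be run on the actual CMake files containing FetchContent_Declare() commands.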

2.6. Compiling, testing and installing

The command make is mainly used to compile the source code, but it can do a number of other things. The generic syntax of the make command is:

make [options] [target] [variable=value]

When no target is given, the target all is used. The following targets are available:

all: Compiles the complete source code.
check: Runs the full testsuite. More fine-grained testsuites are available, such as check_unit_tests and check_python_skip_long.
test: Do not use this target; it is a broken feature (see issue #4370). Use make check instead.
clean: Deletes all files that were created during the compilation.
install: Installs ESPResSo in the path specified by the CMake variable CMAKE_INSTALL_PREFIX. The path can be changed by calling CMake with cmake .. -D CMAKE_INSTALL_PREFIX=/path/to/espresso. Do not use make DESTDIR=/path/to/espresso install to install to a specific path: this will cause issues with the runtime path (RPATH) and will conflict with the CMake variable CMAKE_INSTALL_PREFIX if it has been set.
doxygen: Creates the Doxygen code documentation in the doc/doxygen subdirectory.
sphinx: Creates the Sphinx documentation in the doc/sphinx subdirectory.
tutorials: Creates the tutorials in the doc/tutorials subdirectory.
doc: Creates all documentation in the doc subdirectory (only when using the development sources).

A number of options are available when calling make. The most useful one is probably -j num_jobs, which enables parallel compilation: num_jobs specifies the maximum number of concurrent jobs that will be run. Setting num_jobs to the number of available processors speeds up the compilation process significantly.
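A minimal sketch of choosing num_jobs automatically, assuming bash: nproc (from GNU coreutils, as in the CTest example earlier) is tried first, with getconf _NPROCESSORS_ONLN as a fallback for systems such as macOS that lack nproc. The check for Makefile and CMakeCache.txt is an illustrative guard so that the snippet only builds when run from a configured build directory:

```shell
#!/usr/bin/env bash
# Count the online processors: nproc on Linux, getconf as a portable fallback
num_jobs=$(nproc 2>/dev/null || getconf _NPROCESSORS_ONLN 2>/dev/null || echo 1)
echo "using ${num_jobs} parallel jobs"

# Only build when the current directory looks like a configured CMake build directory
if [ -f Makefile ] && [ -f CMakeCache.txt ]; then
  make -j "${num_jobs}"
fi
```

The same value can be reused for other targets, e.g. make -j "${num_jobs}" check.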

2.7. Troubleshooting

If you encounter issues when building ESPResSo or running it for the first time, please have a look at the Installation FAQ on the wiki. If you still cannot find an answer, try the debugging tools documented in Debugging. If this still does not help, see Community support.